Azure Virtual WAN Routing

First post!

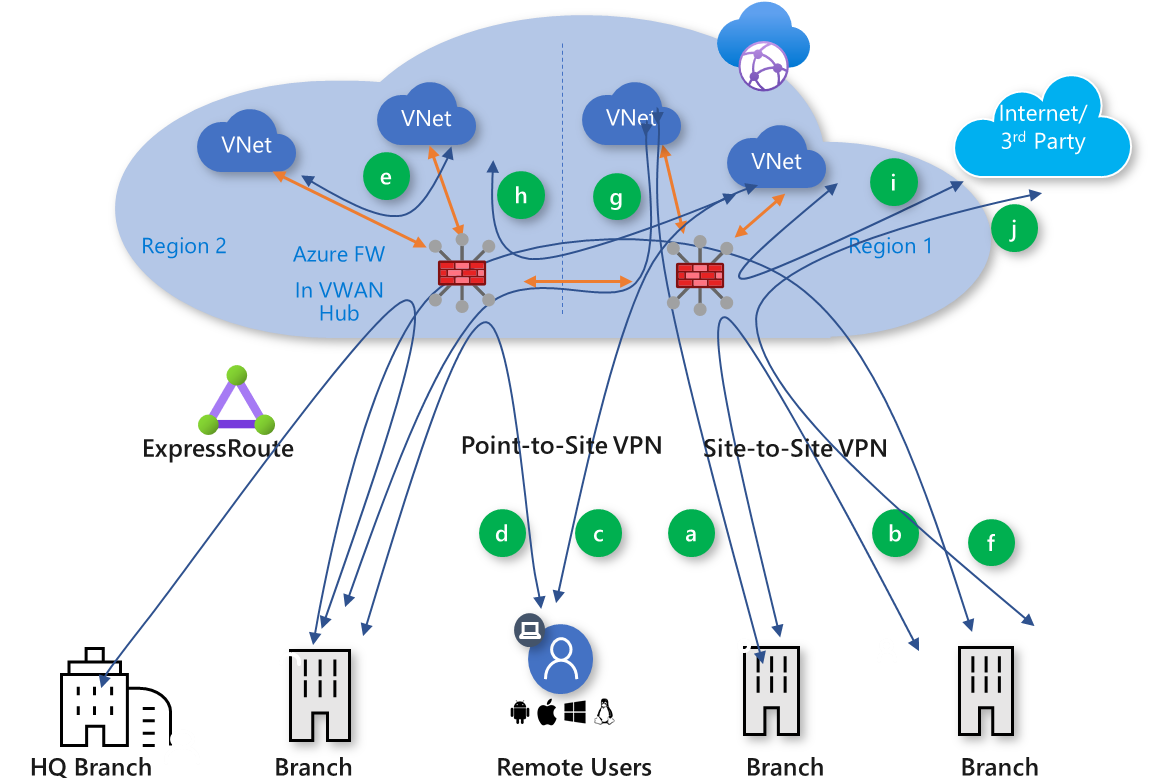

In this post I’ll show how a Virtual WAN multi-hub routing setup can be built using Azure Firewall, with site-to-site VPN gateways carrying the inter-hub traffic.

The Setting

A summary of the setting:

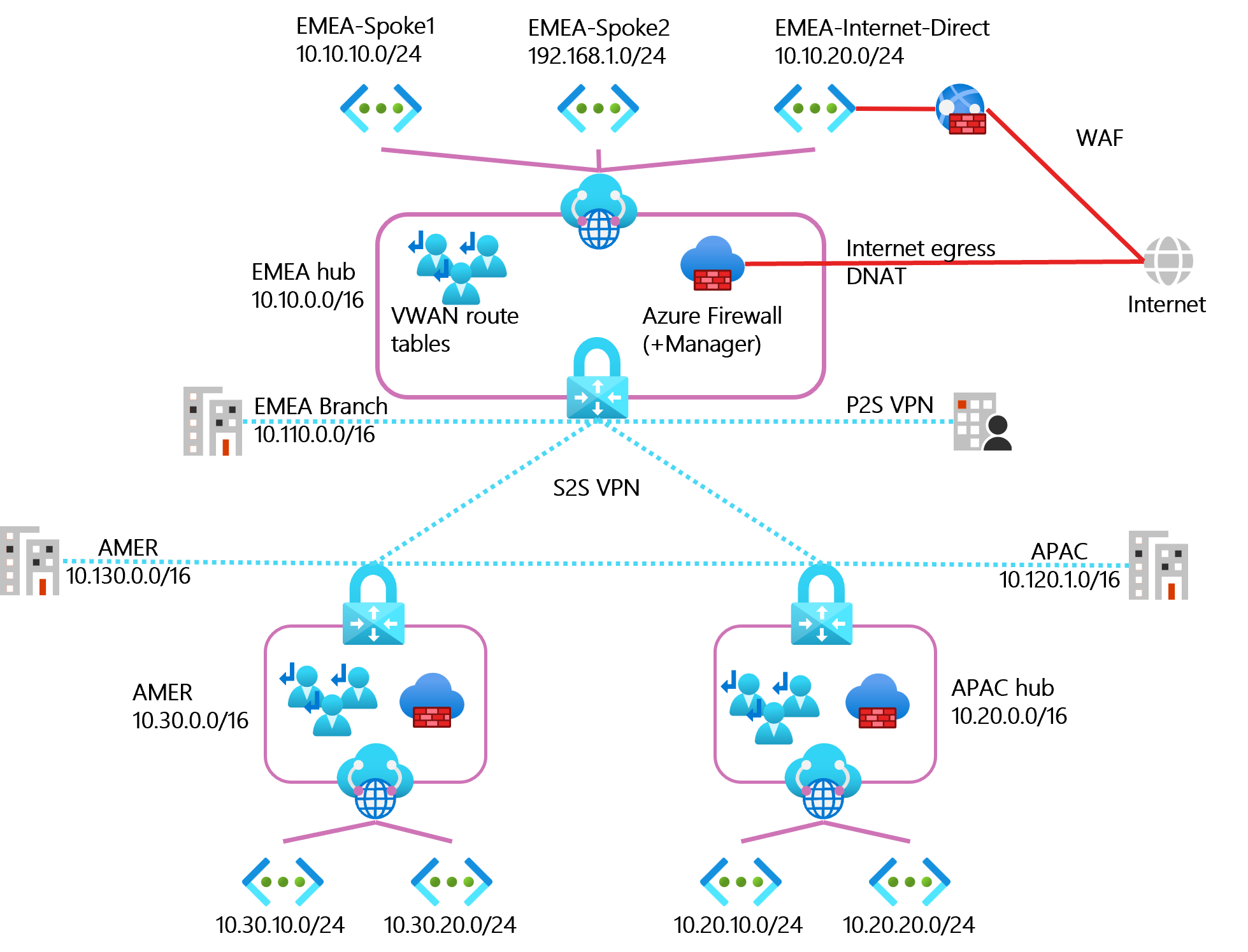

- An organization with branch offices in multiple regions. This post uses three: EMEA, APAC and AMER.

- Virtual WAN must be used as the network backbone, with one hub (but there could be more) in each region.

- Each VWAN hub has a dedicated Azure Firewall, which handles both internet ingress/egress and internal traffic.

- Each VWAN hub has multiple spokes.

- Branch offices connect to the closest VWAN hub using site-to-site VPN (not ExpressRoute).

| Hub | VHub (region) range | Spoke VNets | Branch office range |
|---|---|---|---|
| EMEA | 10.10.0.0/16 | 10.10.10.0/24, 10.10.20.0/24, 192.168.1.0/24 | 10.110.0.0/16 |
| APAC | 10.20.0.0/16 | 10.20.10.0/24, 10.20.20.0/24 | 10.120.0.0/16 |
| AMER | 10.30.0.0/16 | 10.30.10.0/24, 10.30.20.0/24 | 10.130.0.0/16 |
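The whole design below leans on each region owning its own summary ranges, so it is worth checking the address plan for cross-region overlaps before touching any route table. This is a minimal Python sketch using only the standard `ipaddress` module; the `ADDRESS_PLAN` dictionary and the `cross_region_overlaps` helper are illustrative names, not anything Azure provides.

```python
import ipaddress
from itertools import combinations

# Address plan copied from the table above.
ADDRESS_PLAN = {
    "EMEA": {"hub": "10.10.0.0/16",
             "vnets": ["10.10.10.0/24", "10.10.20.0/24", "192.168.1.0/24"],
             "branch": "10.110.0.0/16"},
    "APAC": {"hub": "10.20.0.0/16",
             "vnets": ["10.20.10.0/24", "10.20.20.0/24"],
             "branch": "10.120.0.0/16"},
    "AMER": {"hub": "10.30.0.0/16",
             "vnets": ["10.30.10.0/24", "10.30.20.0/24"],
             "branch": "10.130.0.0/16"},
}

def cross_region_overlaps(plan):
    """Return (prefix, prefix) pairs that belong to different regions but overlap."""
    owned = [
        (region, ipaddress.ip_network(prefix))
        for region, entry in plan.items()
        for prefix in [entry["hub"], entry["branch"], *entry["vnets"]]
    ]
    # Spokes overlap their own hub range by design, so only compare across regions.
    return [
        (str(a), str(b))
        for (ra, a), (rb, b) in combinations(owned, 2)
        if ra != rb and a.overlaps(b)
    ]

print(cross_region_overlaps(ADDRESS_PLAN))  # []
```

An empty list means every prefix can be routed to exactly one region; any pair that shows up would make the summary routes used later ambiguous.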

The Problem

- Virtual WAN comes in two flavours: Basic and Standard. In a Standard Virtual WAN, all hubs are connected in full mesh, and no additional configuration is needed to get meshed hubs. This makes it super easy to use the Microsoft backbone for any-to-any (any spoke) connectivity. In a Basic Virtual WAN, hubs are not meshed.

- A firewall needs to be deployed: either the native Azure Firewall using Firewall Policies, or one of the approved third-party NVAs that can be deployed in the hub. Once a firewall or NVA has been deployed, routing must be configured to support the scenarios listed under The Goal below.

- There is an option to configure the hub as a secured virtual hub, that is, a hub with associated security and routing policies configured by Azure Firewall Manager.

- However, inter-hub processing of traffic via the firewall is currently not supported. Traffic between hubs will be routed to the proper branch within the secured virtual hub, but it will bypass the Azure Firewall in each hub. Additionally, with the secured hub setup, having a WAF in a spoke with direct internet routing also becomes harder. The direct internet option matters because, if routing is not strictly controlled, it is an excellent way to create asymmetric routing that leaves your site not working. The goal is the Zero Trust setup described in the further reading below, where the WAF sits in front of the Azure Firewall (Premium SKU with IDPS).

- Each virtual hub has its own Default route table. All VPN, ExpressRoute and User VPN connections are associated with the same (Default) route table, and by default so is every other connection in the hub. The Default route table can be edited to add static routes, and routes added statically take precedence over dynamically learned routes for the same prefixes. Unless the hub is configured using secured hub/Firewall Manager policies, this behaviour results in traffic bypassing the firewall.
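To make the precedence rule concrete, here is a deliberately simplified Python model of a hub route table. It is not Azure's actual route selection logic: it assumes longest prefix wins, and that for an identical prefix a static route beats a propagated one. The next-hop names `emea-azfw` and `spoke1-connection` are hypothetical. It shows how a spoke that propagates its own /24 into the Default route table pulls traffic past a broader static route pointing at the firewall.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    origin: str  # "static" (added by hand) or "propagated" (learned from a connection)

def lookup(routes, destination):
    """Simplified model: longest prefix wins; on an identical prefix, static beats propagated."""
    ip = ipaddress.ip_address(destination)
    candidates = [r for r in routes if ip in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return max(candidates,
               key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen, r.origin == "static"))

default_table = [
    Route("10.10.0.0/16", "emea-azfw", "static"),
    Route("10.10.10.0/24", "spoke1-connection", "propagated"),  # spoke propagates to Default
]
print(lookup(default_table, "10.10.10.4").next_hop)  # spoke1-connection: firewall bypassed
print(lookup(default_table, "10.10.20.4").next_hop)  # emea-azfw

# A static route for the same /24 wins the tie and brings the firewall back into the path.
default_table.append(Route("10.10.10.0/24", "emea-azfw", "static"))
print(lookup(default_table, "10.10.10.4").next_hop)  # emea-azfw
```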

Some further reading:

- About virtual hub routing

- Secure traffic between Application Gateway and backend pools

- Firewall and Application Gateway for virtual networks

- Zero-trust network for web applications with Azure Firewall and Application Gateway

- What is a secured virtual hub?

- Scenario: Azure Firewall - custom

The Goal

Must be able to support the following scenarios:

- Spoke-to-Spoke traffic within the same region must be routed through a firewall

- Spoke-to-Spoke traffic in separate regions must be routed through a firewall

- Spoke-to-Internet traffic should by default be routed through a firewall

- Spoke-to-Internet-Direct must be routed through a Web Application Firewall.

- Internet-to-Spoke with NAT capabilities

- Branch office-to-spokes traffic must be routed through a firewall

- Branch office must be able to reach spokes in other regions

- Client VPN using Azure VPN Gateway must be routed through a firewall

Inter-VNet traffic would in this case be restricted using NSGs and ASGs.

In short, all traffic goes through the firewall in each hub.

Read more about it: Global transit network architecture

The Workaround (at least one way to do it)

As far as I can tell, after checking the logs from the outbound and inbound Azure Firewalls and enabling/disabling VPN connections to test connectivity, the workaround described below does appear to work as expected. Note that when reviewing Azure Firewall logs (typically in Log Analytics), ping is logged but needs to be searched for a bit differently than normal TCP/UDP traffic.
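The reason ping needs different handling is that ICMP entries carry no ports, so a search that expects `ip:port` pairs silently skips them. The Python sketch below parses both shapes. The message format is an assumption based on the legacy `AzureDiagnostics` network rule messages (the `msg_s` column); verify it against your own workspace. If your firewall logs to the resource-specific tables instead, the fields are already split into columns and this parsing is unnecessary. `parse` and `is_ping` are illustrative helpers.

```python
import re

# ASSUMED message shapes (check against your own logs):
#   "TCP request from 10.10.10.4:50432 to 10.20.10.4:443. Action: Allow"
#   "ICMP Type=8 request from 10.110.0.5 to 10.20.10.4. Action: Allow"
PATTERN = re.compile(
    r"^(?P<proto>TCP|UDP|ICMP)(?: Type=(?P<icmp_type>\d+))? request from "
    r"(?P<src>[\d.]+)(?::(?P<sport>\d+))? to (?P<dst>[\d.]+)(?::(?P<dport>\d+))?\. "
    r"Action: (?P<action>\w+)"
)

def parse(msg):
    """Return the fields of one network rule message, or None if it does not match."""
    m = PATTERN.match(msg)
    return m.groupdict() if m else None

def is_ping(entry):
    """ICMP type 8 is an echo request; note there are no ports to filter on."""
    return entry is not None and entry["proto"] == "ICMP" and entry["icmp_type"] == "8"

print(parse("ICMP Type=8 request from 10.110.0.5 to 10.20.10.4. Action: Allow"))
```

When testing cross-region reachability with ping, filter on protocol and source/destination IP only, since port-based filters never match ICMP.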

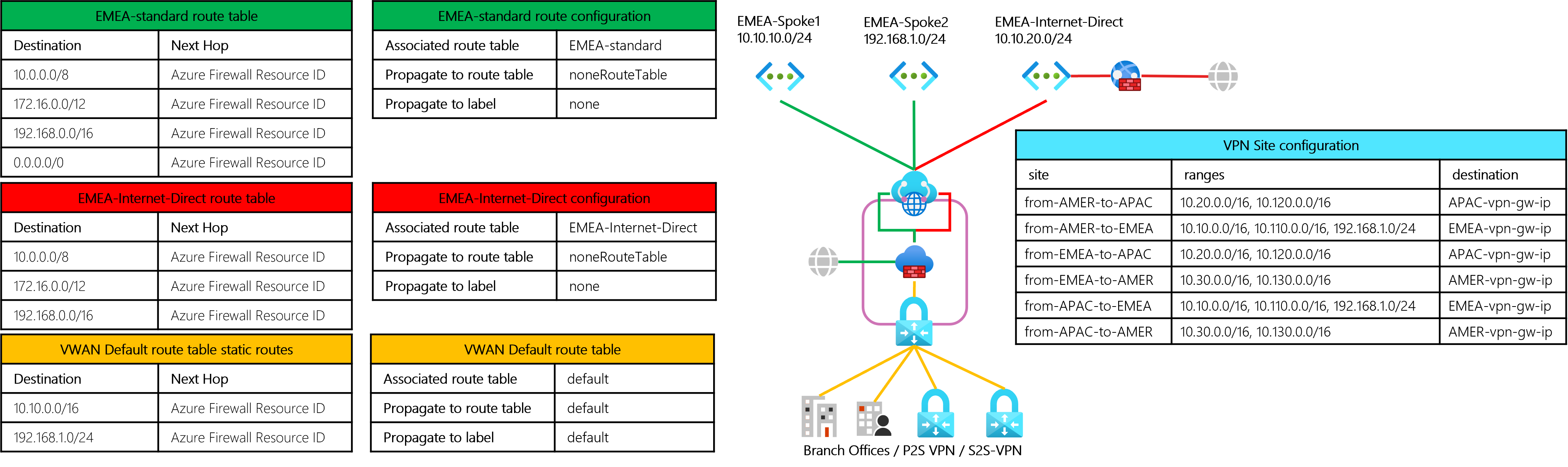

Route table configurations

The routing configuration below needs to be done for each hub, with the ranges updated accordingly. The examples use the EMEA region.

Default VNet routing

Create a route table that will be the new default route table association for the spoke VNets. Use a sensible name such as emea-standard or emea-default. For practical reasons this name should probably deviate from other established naming conventions (for sanity when looking at it in the portal).

This sends spoke-to-spoke, spoke-to-internet, spoke-to-branch and spoke-to-other-region traffic through the firewall.

| Destination type | Destination | Next hop | Comment |
|---|---|---|---|
| CIDR | 10.0.0.0/8 | Azure Firewall resource ID | RFC 1918 private IP range |
| CIDR | 172.16.0.0/12 | Azure Firewall resource ID | RFC 1918 private IP range |
| CIDR | 192.168.0.0/16 | Azure Firewall resource ID | RFC 1918 private IP range |
| CIDR | 0.0.0.0/0 | Azure Firewall resource ID | Anything else not covered above, including the internet |

This route table should not be associated across all connections.

This route table should not propagate routes from the connections to the default route table. When configuring the connection, propagate to the “noneRouteTable” route table (using label “none”).
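If you prefer to script the route tables rather than click through the portal, the routes in the emea-default table above can be expressed as data. This Python sketch builds that payload; the field names follow the hub route shape of the `Microsoft.Network/virtualHubs/hubRouteTables` resource as I understand it (`destinationType`, `destinations`, `nextHopType`, `nextHop`), so check them against the current API reference before use. The firewall resource ID is a placeholder, and the route names `private-to-firewall` and `default-to-firewall` are hypothetical. Dropping the 0.0.0.0/0 route gives the internet direct variant described next.

```python
import json

# Placeholder resource ID: substitute your own subscription, resource group and firewall name.
FIREWALL_ID = ("/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
               "/providers/Microsoft.Network/azureFirewalls/<emea-firewall>")

RFC1918 = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

def firewall_route(name, destinations, firewall_id):
    """One hub route sending the given CIDR destinations to the firewall."""
    return {
        "name": name,
        "destinationType": "CIDR",
        "destinations": list(destinations),
        "nextHopType": "ResourceId",
        "nextHop": firewall_id,
    }

def route_table_properties(firewall_id, include_default_route):
    """Routes for the default VNet table (with 0.0.0.0/0) or the internet direct table (without)."""
    routes = [firewall_route("private-to-firewall", RFC1918, firewall_id)]
    if include_default_route:
        routes.append(firewall_route("default-to-firewall", ["0.0.0.0/0"], firewall_id))
    return {"properties": {"routes": routes}}

emea_default = route_table_properties(FIREWALL_ID, include_default_route=True)
emea_internet_direct = route_table_properties(FIREWALL_ID, include_default_route=False)
print(json.dumps(emea_default, indent=2))
```

Grouping the three RFC 1918 prefixes into one route is a choice for brevity; one route per row, as in the table, works equally well.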

Internet direct routing

Create a route table for VNets that need to route directly outbound to the internet, typically VNets hosting a WAF whose backend is in a different spoke. Again, for sanity, use a simple name that gives easy-to-understand meaning and context when looking at the route tables in the portal.

This sends spoke-to-spoke, spoke-to-branch and spoke-to-other-region traffic through the firewall.

| Destination type | Destination | Next hop | Comment |
|---|---|---|---|
| CIDR | 10.0.0.0/8 | Azure Firewall resource ID | RFC 1918 private IP range |
| CIDR | 172.16.0.0/12 | Azure Firewall resource ID | RFC 1918 private IP range |
| CIDR | 192.168.0.0/16 | Azure Firewall resource ID | RFC 1918 private IP range |

There is deliberately no 0.0.0.0/0 route: in this scenario the VNet routes 0.0.0.0/0 directly to the internet, so responses to clients that came in through the WAF go straight back out.

This route table should not be associated across all connections.

This route table should not propagate routes from the connections to the default route table. When configuring the connection, propagate to the “noneRouteTable” route table (using label “none”).
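Why the WAF spoke needs its own table comes down to symmetry: a client reaches the WAF's public IP directly from the internet, so the reply must leave the same way. The Python sketch below is a simplified model, not Azure's route evaluation: it does a longest-prefix match over the hub routes and treats "no match" as the VNet's own route to the internet. `203.0.113.10` is a documentation address standing in for a client, and `10.10.20.4` is a hypothetical backend in another EMEA spoke.

```python
import ipaddress

RFC1918_TO_FW = [("10.0.0.0/8", "firewall"), ("172.16.0.0/12", "firewall"),
                 ("192.168.0.0/16", "firewall")]
DEFAULT_TABLE = RFC1918_TO_FW + [("0.0.0.0/0", "firewall")]   # default VNet routing
INTERNET_DIRECT_TABLE = list(RFC1918_TO_FW)                   # internet direct routing

def return_path(hub_routes, destination):
    """Longest-prefix match over the hub routes; no match means the VNet's own internet route."""
    ip = ipaddress.ip_address(destination)
    matches = [(ipaddress.ip_network(p), hop) for p, hop in hub_routes
               if ip in ipaddress.ip_network(p)]
    if not matches:
        return "internet"
    return max(matches, key=lambda m: m[0].prefixlen)[1]

def symmetric(hub_routes, client_ip, inbound_via="internet"):
    """The request arrived directly from the internet; the reply should leave the same way."""
    return return_path(hub_routes, client_ip) == inbound_via

CLIENT = "203.0.113.10"
BACKEND = "10.10.20.4"

print(symmetric(DEFAULT_TABLE, CLIENT))             # False: reply goes to a firewall that never saw the request
print(symmetric(INTERNET_DIRECT_TABLE, CLIENT))     # True
print(return_path(INTERNET_DIRECT_TABLE, BACKEND))  # firewall: WAF-to-backend traffic is still inspected
```

A stateful firewall that only sees the reply half of a connection will typically drop it, which is the "site not working" symptom mentioned earlier.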

VPN connections

All VPN connections are associated with the VWAN Default route table. This sends traffic belonging to a specific hub and its connected VNets to the firewall.

| Destination type | Destination | Next hop | Comment |
|---|---|---|---|
| CIDR | 10.10.0.0/16 | Azure Firewall resource ID | The range used for this hub. Change this to reflect the local hub range in other regions. |
| CIDR | 192.168.1.0/24 | Azure Firewall resource ID | EMEA spoke VNet outside 10.10.0.0/16 |

Try to use as broad a range as possible. However, if a range overlaps with ranges coming in over VPN, it needs to be more specific than the VPN ranges.

The Default route table should be associated across all connections.

The VPN connections should propagate their routes to the Default route table.

Having small VNets that are not part of the larger range for a given region does add overhead.

Point-to-site/client VPN address pools deployed in a hub also have to be added if they fall outside the range defined for the region.
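The bookkeeping for the Default route table can be sketched in Python: start from the region range, add every spoke VNet or point-to-site pool it does not cover, then apply the rule of thumb above and flag any static prefix that overlaps a VPN-learned prefix without being more specific. The helper names and the `172.31.100.0/24` client pool are hypothetical; this only computes prefixes and does not call Azure.

```python
import ipaddress

def default_table_prefixes(region_range, spoke_vnets, p2s_pools):
    """Region summary first, then any spoke VNet or P2S pool the summary does not cover."""
    region = ipaddress.ip_network(region_range)
    prefixes = [region_range]
    for prefix in [*spoke_vnets, *p2s_pools]:
        if not ipaddress.ip_network(prefix).subnet_of(region):
            prefixes.append(prefix)
    return prefixes

def not_specific_enough(static_prefixes, vpn_prefixes):
    """Static prefixes that overlap a VPN-learned prefix without being more specific than it."""
    problems = []
    for s in map(ipaddress.ip_network, static_prefixes):
        for v in map(ipaddress.ip_network, vpn_prefixes):
            if s.overlaps(v) and s.prefixlen <= v.prefixlen:
                problems.append((str(s), str(v)))
    return problems

emea = default_table_prefixes(
    "10.10.0.0/16",
    ["10.10.10.0/24", "10.10.20.0/24", "192.168.1.0/24"],
    ["172.31.100.0/24"],  # hypothetical P2S client address pool
)
print(emea)  # ['10.10.0.0/16', '192.168.1.0/24', '172.31.100.0/24']
print(not_specific_enough(emea, ["10.20.0.0/16", "10.110.0.0/16"]))  # []
print(not_specific_enough(["10.0.0.0/8"], ["10.110.0.0/16"]))        # [('10.0.0.0/8', '10.110.0.0/16')]
```

The last line shows why "as broad as possible" has a limit: a 10.0.0.0/8 static route would swallow the EMEA branch range learned over VPN.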

Interhub routing and connectivity

Because the Virtual WAN mesh inter-hub connectivity does not work when traffic is sent to the firewalls, one option is to connect the hubs to each other using site-to-site VPN gateways. I have not tested it, but ExpressRoute would probably also work, provided the correct SKU is used that allows for ExpressRoute Global Reach etc.

When configuring the VPN sites, make sure to include the VHub local range(s) as well as the on-prem ranges that need to be routed to. The table below illustrates the site-to-site VPN configuration. Naming of the sites/connections gets confusing, so, again, in this context a name that gives a clear understanding of the configuration matters more than the long and impractical name a normal naming convention would produce. (I am a big fan of naming conventions and they should always be used, but adapted to context.)

| Site | Address ranges | Destination (remote VPN gateway) | Comment |
|---|---|---|---|
| from-AMER-to-APAC | 10.20.0.0/16, 10.120.0.0/16 | APAC-vpn-gw-ip | configured in the AMER vhub |
| from-AMER-to-EMEA | 10.10.0.0/16, 10.110.0.0/16, 192.168.1.0/24 | EMEA-vpn-gw-ip | configured in the AMER vhub |
| from-EMEA-to-APAC | 10.20.0.0/16, 10.120.0.0/16 | APAC-vpn-gw-ip | configured in the EMEA vhub |
| from-EMEA-to-AMER | 10.30.0.0/16, 10.130.0.0/16 | AMER-vpn-gw-ip | configured in the EMEA vhub |
| from-APAC-to-EMEA | 10.10.0.0/16, 10.110.0.0/16, 192.168.1.0/24 | EMEA-vpn-gw-ip | configured in the APAC vhub |
| from-APAC-to-AMER | 10.30.0.0/16, 10.130.0.0/16 | AMER-vpn-gw-ip | configured in the APAC vhub |
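The site table is fully determined by the address plan: every hub gets one site per remote hub, listing everything that lives behind that remote hub. Generating it, as in this Python sketch, avoids forgetting an out-of-range VNet such as 192.168.1.0/24 in one of the sites. `PLAN` and `vpn_sites` are illustrative names, and the `*-vpn-gw-ip` values are the same placeholders used in the table.

```python
from itertools import permutations

# Everything reachable behind each hub: region range, branch range, out-of-range spokes.
PLAN = {
    "EMEA": ["10.10.0.0/16", "10.110.0.0/16", "192.168.1.0/24"],
    "APAC": ["10.20.0.0/16", "10.120.0.0/16"],
    "AMER": ["10.30.0.0/16", "10.130.0.0/16"],
}

def vpn_sites(plan):
    """One site per ordered (local hub, remote hub) pair, mirroring the table above."""
    return [
        {
            "site": f"from-{local}-to-{remote}",
            "configured_in": f"{local} vhub",
            "address_ranges": list(plan[remote]),
            "peer": f"{remote}-vpn-gw-ip",  # placeholder for the remote VPN gateway public IP
        }
        for local, remote in permutations(plan, 2)
    ]

for site in vpn_sites(PLAN):
    print(site["site"], site["address_ranges"], site["peer"])
```

With three hubs this yields the six sites in the table; adding a fourth region only means adding one entry to `PLAN`.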

NOTE: If the VPN gateway is also used for branch office connectivity and there are multiple branch office locations, each location requires an additional VPN site in the hub it connects to. Because all VPN connections are associated with, and propagate to, the Default route table, Azure sends traffic belonging to another region across the corresponding site-to-site connection.

This also handles branch-to-cross-region routing and connectivity, as long as the VPN sites include the spoke ranges and branch office ranges behind the hub each gateway connects to. Traffic belonging to a specific hub and its connected VNets is then sent to that hub's firewall.
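To see how a branch packet crosses regions, this Python sketch walks a simplified model of the Default route tables hub by hub. It assumes each hub's Default table holds static routes to its own firewall for the local ranges, a route to its branch site, and the ranges learned from the inter-hub VPN sites; it picks the longest prefix and follows `s2s:` hops until it reaches something else. It is a model of the setup described above, not Azure's forwarding logic, and the next-hop names are hypothetical.

```python
import ipaddress

# Per region: prefixes owned by the hub (region range plus out-of-range spokes) and the branch range.
PLAN = {
    "EMEA": {"local": ["10.10.0.0/16", "192.168.1.0/24"], "branch": "10.110.0.0/16"},
    "APAC": {"local": ["10.20.0.0/16"], "branch": "10.120.0.0/16"},
    "AMER": {"local": ["10.30.0.0/16"], "branch": "10.130.0.0/16"},
}

def default_table(region, plan):
    """Simplified Default route table as seen by the VPN connections in one hub."""
    table = [(p, f"{region}-azfw") for p in plan[region]["local"]]   # static, to the firewall
    table.append((plan[region]["branch"], f"{region}-branch-vpn"))   # learned from the branch site
    for other, entry in plan.items():
        if other != region:                                          # learned from inter-hub sites
            table += [(p, f"s2s:{other}") for p in [*entry["local"], entry["branch"]]]
    return table

def walk(plan, entry_region, destination, max_hops=5):
    """Follow longest-prefix matches from hub to hub until a non-VPN-site next hop is reached."""
    ip = ipaddress.ip_address(destination)
    region, path = entry_region, []
    for _ in range(max_hops):
        matches = [(ipaddress.ip_network(p), hop) for p, hop in default_table(region, plan)
                   if ip in ipaddress.ip_network(p)]
        if not matches:
            return path + ["no route"]
        hop = max(matches, key=lambda m: m[0].prefixlen)[1]
        path.append(hop)
        if not hop.startswith("s2s:"):
            return path
        region = hop.split(":", 1)[1]
    raise RuntimeError("possible routing loop")

print(walk(PLAN, "EMEA", "10.20.10.4"))   # ['s2s:APAC', 'APAC-azfw']
print(walk(PLAN, "AMER", "192.168.1.4"))  # ['s2s:EMEA', 'EMEA-azfw']
```

In this model, branch traffic to another region is handed over the inter-hub VPN site and inspected by the destination hub's firewall, and the 192.168.1.0/24 spoke is only reachable from AMER and APAC because it is listed in their sites towards EMEA.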

I have not tested branch-to-branch over VWAN (site-to-site VPN), but go for it (just YoloIT it, in fact! No… stop, breathe, think first).

NAT (not using Azure Firewall DNAT)

Azure Firewall supports DNAT, and it works without any further configuration. I have not tested using a NAT Gateway, but I would expect the internet direct route table to be the way to go for the NAT Gateway to function as expected.

All together now

Summary of the solutions discussed above.

The Future

I have not tested this yet, but “Hub Routing Intent” is meant to help solve some of these routing difficulties in Virtual WAN.

It is currently in preview and is also limited to hubs within the same Azure region. Read more about it: How to configure Virtual WAN Hub routing intent and routing policies

The End

Hope this helps!

Any questions, comments etc. send it to: always.blame.teamNetwork@yoloit.no

(what, no, never blame team Network!)